Workform

From Idea to AI MVP: Building Workform with Refact

- Product Design

- MVP Development

- AI Agent Development

- API Integrations

- UI/UX Design

The Client

A project management consultant with years of experience leading complex projects. He runs training programs, consults for teams, and has built a community of project managers. He came to Refact to build an AI assistant that could help project managers stay on top of their work by connecting information scattered across Slack, email, Asana, and meetings.

The Challenge

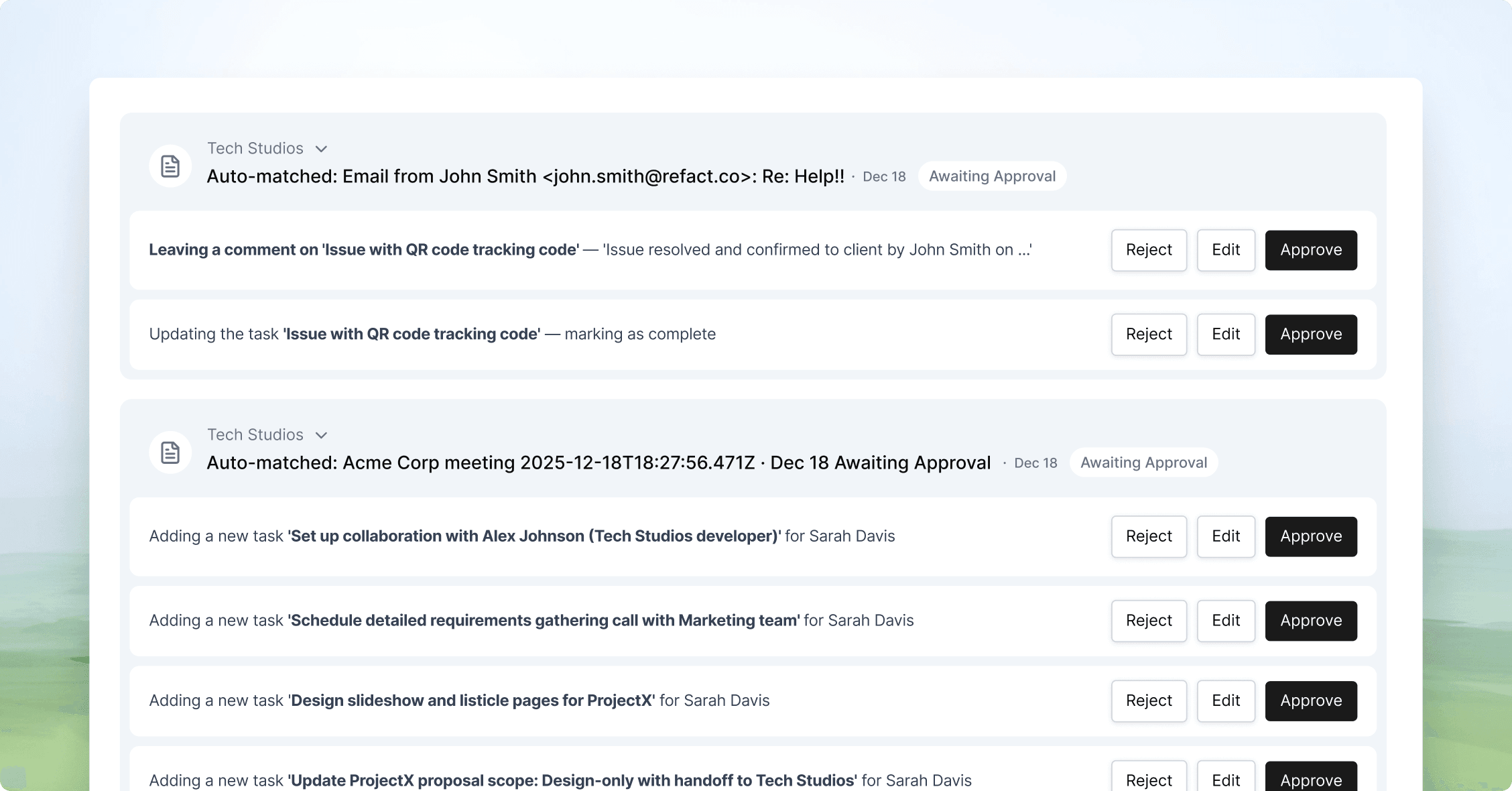

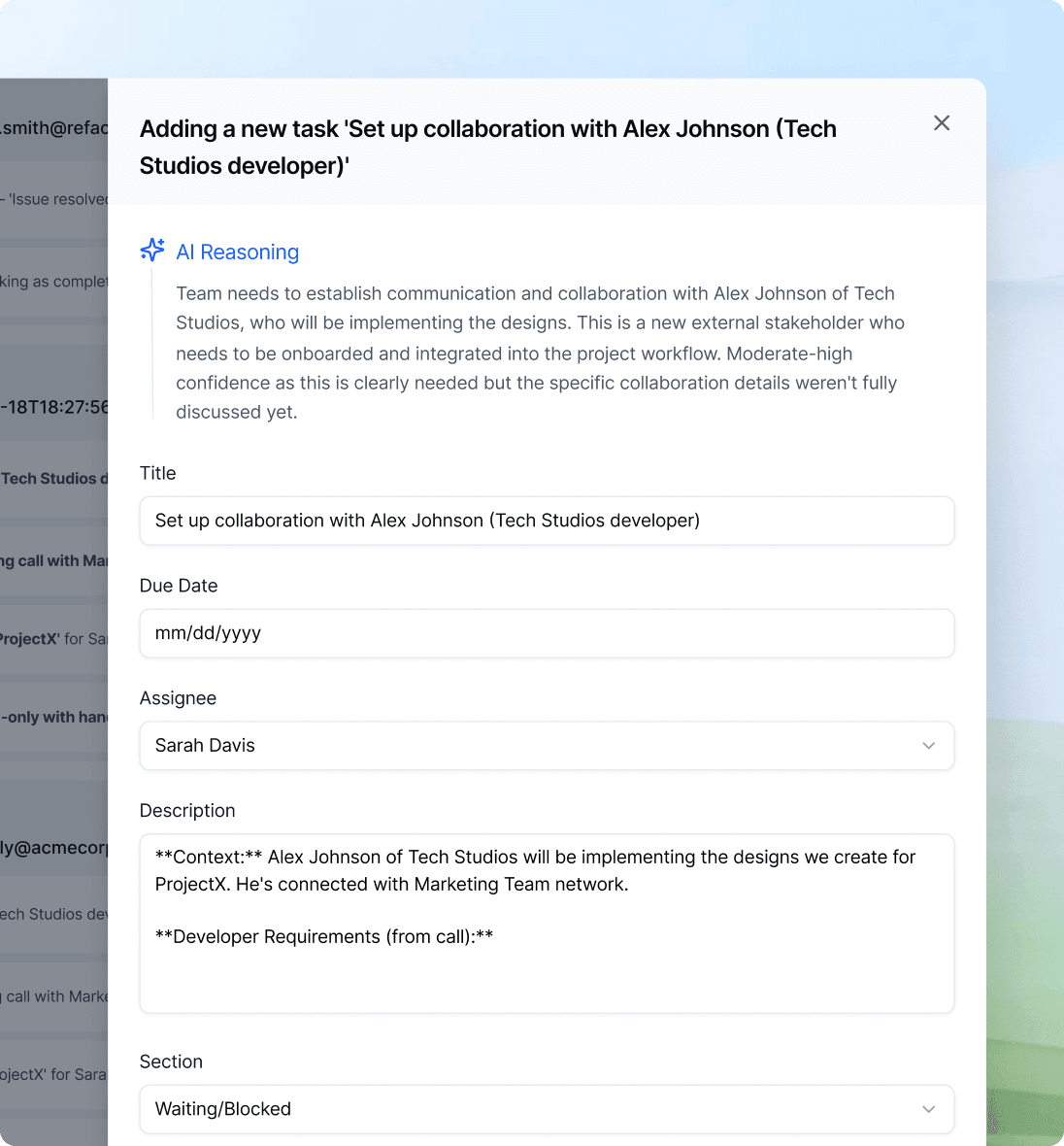

The initial concept was broad: an AI assistant that helps project managers with “everything.” Through our blueprint process, we narrowed the scope to a focused MVP and reframed the product. Instead of building a task generator or a standalone PM tool, we would build an AI assistant that actually understands projects by ingesting and connecting data from multiple sources, and that can also keep project managers' boards up to date.

Features cut or saved for later: a chat interface, a standalone project management tool, and several secondary workflows. The first version would focus on core workflows and integrate with the tools project managers already use.

What We Built

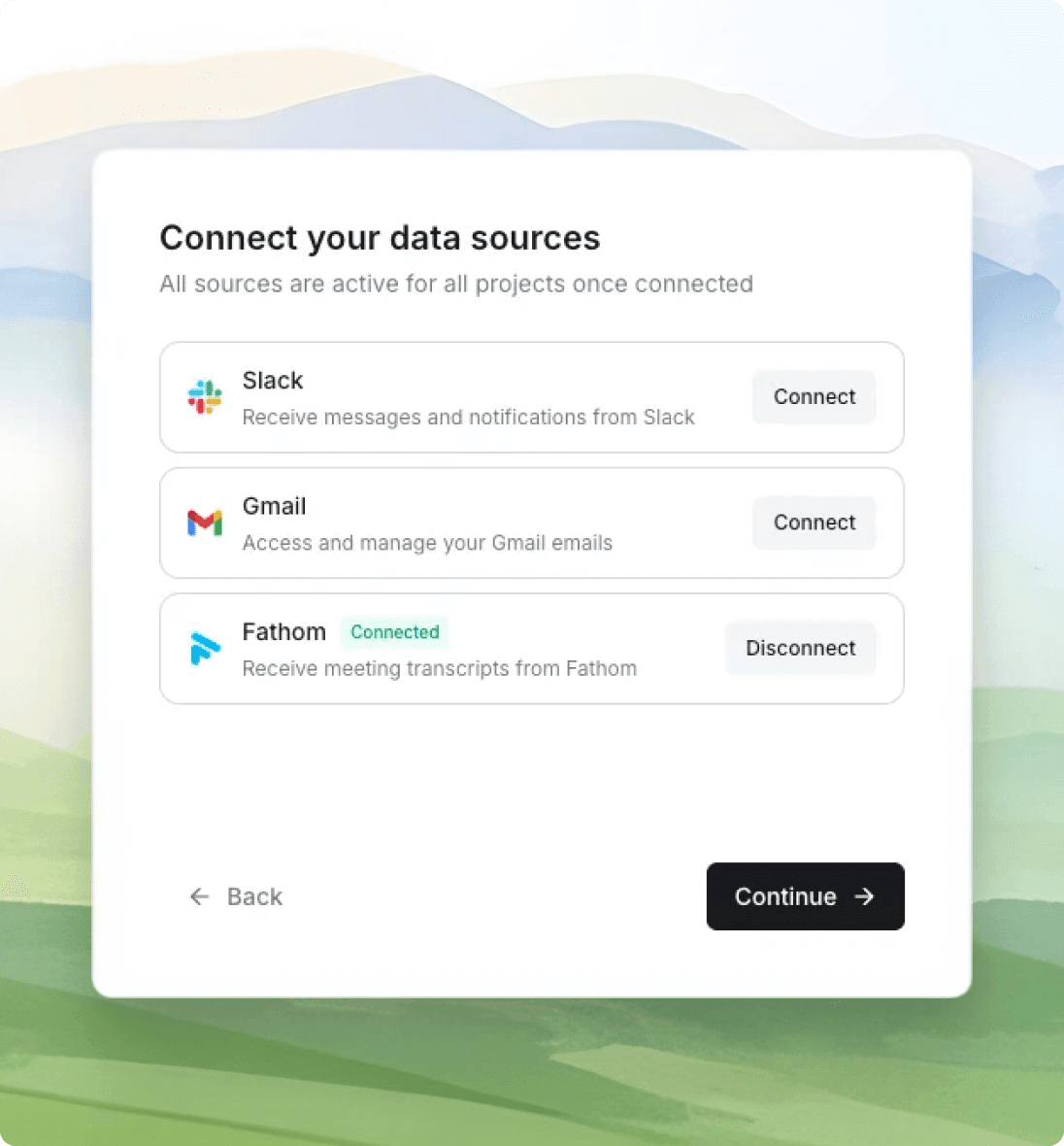

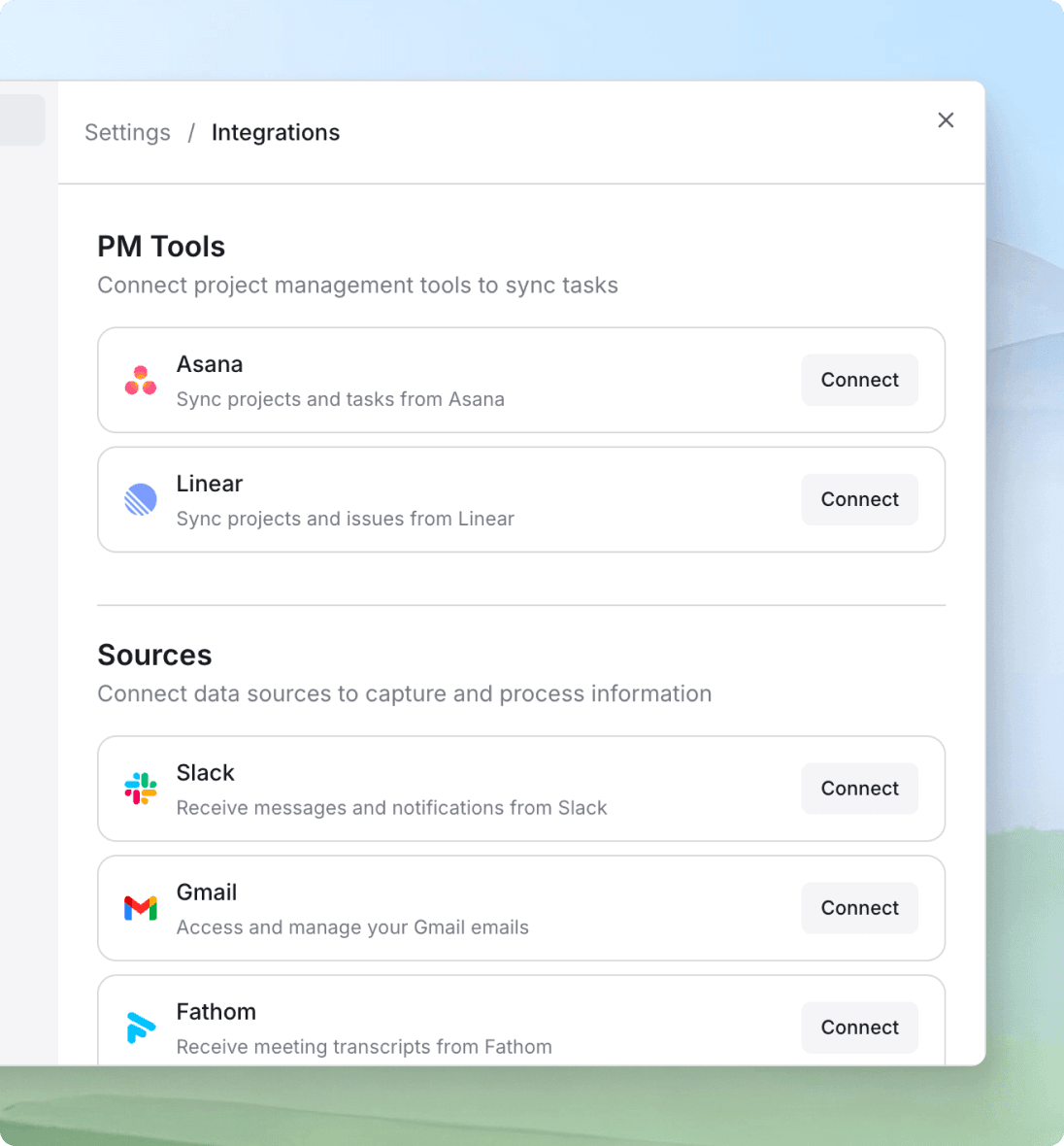

The MVP integrates with four sources: Slack, Gmail, Asana, and meeting transcripts. The system processes incoming information in real time, connects related threads across sources, and maintains a running understanding of what's happening across each project.

Architecture

We tested four major architectural approaches before landing on the current system. The core technical problem is context engineering: the AI needs to understand that a login bug mentioned in Slack and a related Flutter issue discussed in an email two days later belong to the same topic.
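To make the linking problem concrete, here is a minimal sketch of grouping items from different sources into topics. It is not Workform's implementation: it swaps in plain keyword overlap (Jaccard similarity) plus a time window where a production system would more likely use embeddings or an LLM, and the `Item`/`Topic` types, the stopword list, the 0.2 threshold, and the 7-day window are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"the", "a", "an", "on", "in", "is", "to", "of", "and", "for", "we", "it", "re"}

@dataclass
class Item:
    source: str        # e.g. "slack", "gmail", "asana"
    sent_at: datetime
    text: str

@dataclass
class Topic:
    items: list = field(default_factory=list)
    keywords: set = field(default_factory=set)

def keywords(text: str) -> set:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def jaccard(a: set, b: set) -> float:
    """Overlap of two keyword sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def assign_topics(items, threshold=0.2, window=timedelta(days=7)):
    """Greedily attach each item to its most similar recent topic, else open a new topic."""
    topics = []
    for item in sorted(items, key=lambda i: i.sent_at):
        kw = keywords(item.text)
        best, best_score = None, 0.0
        for topic in topics:
            # Skip topics whose latest item is too old to be the same conversation.
            if item.sent_at - topic.items[-1].sent_at > window:
                continue
            score = jaccard(kw, topic.keywords)
            if score > best_score:
                best, best_score = topic, score
        if best is not None and best_score >= threshold:
            best.items.append(item)
            best.keywords |= kw   # the topic's vocabulary grows as items join it
        else:
            topics.append(Topic(items=[item], keywords=set(kw)))
    return topics
```

With a Slack message about a Flutter login bug and an email two days later about the same bug, both land in one topic while an unrelated Asana task starts its own. Keyword overlap misses paraphrases ("can't sign in" vs. "auth failure"), which is exactly why semantic matching matters in practice.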

Language models have limited context windows, and more information doesn’t guarantee better results. We developed techniques for compressing context, recovering compressed data when needed, and deciding what information belongs in hot memory versus cold storage.
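One way to picture hot memory, cold storage, and recovery is a token-budgeted buffer. This is a minimal sketch under assumptions, not the system described above: `ContextMemory` is a hypothetical class, word counts stand in for a real tokenizer, and `compress` simply truncates where a real pipeline would likely summarize with a model.

```python
from dataclasses import dataclass

@dataclass
class Entry:
    key: str
    text: str
    compressed: bool = False

def approx_tokens(text: str) -> int:
    # Rough heuristic: whitespace-separated words, not a real tokenizer.
    return len(text.split())

def compress(text: str, max_words: int = 8) -> str:
    # Hypothetical stand-in for an LLM summary: keep only the first few words.
    words = text.split()
    return text if len(words) <= max_words else " ".join(words[:max_words]) + " ..."

class ContextMemory:
    """Hot entries go into the prompt; cold storage keeps full text for recovery."""

    def __init__(self, budget: int):
        self.budget = budget
        self.hot: list[Entry] = []
        self.cold: dict[str, str] = {}

    def hot_tokens(self) -> int:
        return sum(approx_tokens(e.text) for e in self.hot)

    def add(self, key: str, text: str) -> None:
        self.cold[key] = text  # the full copy always survives in cold storage
        self.hot.append(Entry(key, text))
        # Compress oldest uncompressed entries first until hot memory fits the budget.
        for entry in self.hot:
            if self.hot_tokens() <= self.budget:
                break
            if not entry.compressed:
                entry.text = compress(entry.text)
                entry.compressed = True
        # If compression is not enough, evict the oldest entries from hot memory.
        while self.hot_tokens() > self.budget and len(self.hot) > 1:
            self.hot.pop(0)

    def recover(self, key: str) -> str:
        """Return the full, uncompressed text from cold storage."""
        return self.cold[key]

    def render(self) -> str:
        """Text that would be placed in the model's context window."""
        return "\n".join(f"[{e.key}] {e.text}" for e in self.hot)
```

The design choice here is that compression is lossy only for the prompt, never for the data: anything squeezed out of hot memory can be pulled back with `recover` when a question needs the detail.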

The Slack Integration

Of the four integrations, Slack was the most difficult. Messages are short and disconnected. Data about a single topic spreads across multiple threads, channels, and days. The API is difficult to work with, bot access has its own challenges, and search functionality is limited. Even Slack hasn't shipped meaningful AI features on its own platform.

We built custom pipelines that reconstruct context from fragmented conversations and connect related threads across time.
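A first step in such a pipeline might look like the sketch below. It assumes message dicts shaped like Slack API message objects (`ts`, optional `thread_ts`, `text`) with a `channel` key added by the caller; the function name and the 10-minute `gap_seconds` window are hypothetical, and this is an illustration rather than the pipeline Refact built.

```python
from collections import defaultdict

def reconstruct_conversations(messages, gap_seconds=600):
    """Group Slack messages into conversation chunks.

    Replies are grouped with their parent via thread_ts (a parent's thread_ts
    equals its own ts). Standalone top-level messages in the same channel are
    merged into one chunk when each arrives within gap_seconds of the last.
    """
    threads = defaultdict(list)
    for m in messages:
        root = m.get("thread_ts", m["ts"])
        threads[(m["channel"], root)].append(m)

    chunks = []
    # Slack ts values are strings like "1714550400.000100", so compare them as floats.
    for (channel, root), msgs in sorted(threads.items(), key=lambda kv: (kv[0][0], float(kv[0][1]))):
        msgs.sort(key=lambda m: float(m["ts"]))
        is_thread = len(msgs) > 1
        prev = chunks[-1] if chunks else None
        if (not is_thread and prev is not None and prev["channel"] == channel
                and not prev["is_thread"]
                and float(root) - prev["last_ts"] <= gap_seconds):
            # A quick follow-up message: treat it as part of the previous burst.
            prev["messages"].extend(msgs)
            prev["last_ts"] = float(msgs[-1]["ts"])
            continue
        chunks.append({"channel": channel, "is_thread": is_thread,
                       "messages": msgs, "last_ts": float(msgs[-1]["ts"])})
    return chunks
```

Chunks like these are small enough to summarize individually and can then be fed into cross-source topic linking, which is where related threads from different days get connected.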

Model Selection

We matched models to tasks rather than defaulting to one model for everything. The main agent runs on OpenAI's most capable models. For scenarios involving many tools, Anthropic's models performed better. For simpler processing tasks where speed matters more than reasoning depth, we use smaller models like GPT-4o mini.
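Task-based model selection can be as simple as a routing function. In this sketch only `gpt-4o-mini` is a real model name taken from the text; the other two identifiers are hypothetical placeholders, and the task kinds and the 10-tool cutoff are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Placeholders: the case study names only GPT-4o mini explicitly.
FLAGSHIP_OPENAI = "openai/flagship-model"       # hypothetical: "OpenAI's most capable models"
ANTHROPIC_TOOLS = "anthropic/tool-heavy-model"  # hypothetical: the Anthropic model
FAST_SMALL = "gpt-4o-mini"

MANY_TOOLS_THRESHOLD = 10                       # assumed cutoff, not from the source
SIMPLE_KINDS = {"classify", "extract", "summarize"}

@dataclass
class Task:
    kind: str            # e.g. "agent", "classify", "summarize"
    tool_count: int = 0  # number of tools exposed to the model for this task

def pick_model(task: Task) -> str:
    """Route a task by what it needs most: speed, heavy tool use, or reasoning."""
    if task.kind in SIMPLE_KINDS and task.tool_count == 0:
        return FAST_SMALL        # speed matters more than reasoning depth
    if task.tool_count >= MANY_TOOLS_THRESHOLD:
        return ANTHROPIC_TOOLS   # many-tool scenarios
    return FLAGSHIP_OPENAI       # default for the main agent
```

Keeping the routing in one function makes it cheap to re-benchmark and swap models as new releases change which one wins for each task type.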

Response times range from a few seconds for simple operations to a minute or two for complex multi-step tasks.

Infrastructure Decisions

We outsourced authentication and tool-calling to Composio rather than building them from scratch, which provided enterprise-grade scalability without months of custom development. We added observability early so we could debug issues quickly. We avoided popular agentic frameworks such as LangChain, which in our experience don't hold up in production.
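"Observability early" can start with something as small as timing and recording every tool call. The sketch below is a generic tracing decorator using only the Python standard library; it does not use Composio's API, and the `TRACE` list, the `traced` decorator, and the `asana.update_task` tool name are hypothetical. A real setup would export these records to a tracing backend rather than keep them in memory.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.trace")
TRACE: list[dict] = []  # in-memory span records, for illustration only

def traced(name: str):
    """Decorator that records the duration and outcome of each call."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return fn(*args, **kwargs)
            except Exception:
                status = "error"
                raise  # re-raise so callers still see the failure
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                TRACE.append({"name": name, "status": status, "ms": elapsed_ms})
                log.info("%s %s %.1fms", name, status, elapsed_ms)
        return wrapper
    return decorator

@traced("asana.update_task")  # hypothetical tool name
def update_task(task_id: str, status: str) -> dict:
    # Stand-in for a real tool call made through an auth/tool-calling layer.
    return {"id": task_id, "status": status}
```

Because failures are recorded before being re-raised, slow or failing tools show up in the trace even when the agent recovers and the user never sees an error.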

We also made a deliberate decision to avoid building complex scaffolding for limitations that will disappear with better models. The codebase is lean enough to evolve as the technology improves.

The Result

A working MVP is now in beta testers’ hands. Users report saving significant time each week on administrative work. The architecture is built to scale and evolve as AI technology advances.

We continue working together on subsequent phases.

A reliable, trustworthy, and resourceful partner that understands technology and how to build and launch successful software. Especially for non-technical people who have domain expertise but don't have the background to understand what goes into building software.

Saeedreza Abbaspour